Sequence

This site presents example video and image results for the algorithm described in "Detecting Irregularities in Images and in Video" (ICCV 2005).

Conference paper (pdf).

Conference presentation (ppt).

A longer version of this paper was accepted to IJCV (Special 2005 Marr Prize Issue).

We address the problem of detecting irregularities in visual data, e.g., detecting suspicious behaviors in video sequences, or identifying salient patterns in images. The term “irregular” depends on the context in which the “regular” or “valid” are defined. Yet, it is not realistic to expect explicit definition of all possible valid configurations for a given context. We pose the problem of determining the validity of visual data as a process of constructing a puzzle: We try to compose a new observed image region or a new video segment (“the query”) using chunks of data (“pieces of puzzle”) extracted from previous visual examples (“the database”). Regions in the observed data which can be composed using large contiguous chunks of data from the database are considered very likely, whereas regions in the observed data which cannot be composed from the database (or can be composed, but only using small fragmented pieces) are regarded as unlikely/suspicious.

The problem is posed as an inference process in a probabilistic graphical model. We show applications of this approach to identifying saliency in images and video, for detecting suspicious behaviors and for automatic visual inspection for quality assurance.
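The puzzle idea can be illustrated with a deliberately simplified toy. The sketch below is not the paper's probabilistic inference: it assumes the data is a one-dimensional sequence of symbols and that chunks must match exactly. The names `longest_match_from`, `chunk_support`, `flag_irregular`, and the `min_chunk` threshold are hypothetical, introduced only for illustration. For each query position it records the size of the largest contiguous database chunk that covers it, and flags positions covered only by small chunks.

```python
def longest_match_from(query, start, database):
    """Length of the longest chunk query[start:start+k] that appears
    contiguously in any database sequence (exact matching, toy assumption)."""
    best = 0
    for seq in database:
        for j in range(len(seq)):
            k = 0
            while (start + k < len(query) and j + k < len(seq)
                   and query[start + k] == seq[j + k]):
                k += 1
            best = max(best, k)
    return best


def chunk_support(query, database):
    """For each query position, the size of the largest contiguous
    database chunk that covers that position."""
    support = [0] * len(query)
    for start in range(len(query)):
        length = longest_match_from(query, start, database)
        for i in range(start, start + length):
            support[i] = max(support[i], length)
    return support


def flag_irregular(query, database, min_chunk=3):
    """True where a position is covered only by chunks smaller than
    min_chunk (a hypothetical threshold, not taken from the paper)."""
    return [s < min_chunk for s in chunk_support(query, database)]


database = ["abcdef", "xyz"]
# Composed from large chunks, except the unseen symbol "q".
print(chunk_support("abcxyzqdef", database))  # [3, 3, 3, 3, 3, 3, 0, 3, 3, 3]
# Every symbol exists in the database, but only as fragmented single pieces.
print(chunk_support("adbe", database))        # [1, 1, 1, 1]
```

The second query shows the "small fragmented pieces" case: every symbol is present in the database, yet no chunk longer than one symbol can be reused, so the whole query would be flagged.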

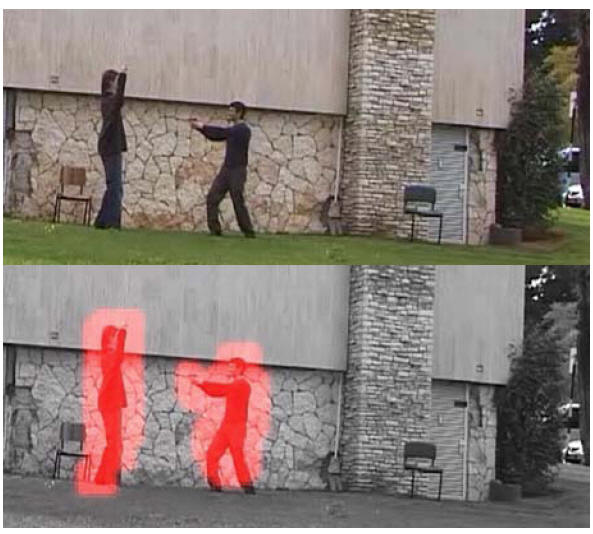

In this example, given a database of walking examples (e.g., a man walking and running), new valid behavior combinations are automatically inferred from the database (e.g., two men walking together, a different person running), even though they have never been seen before. Behaviors which cannot be inferred from the database clips (e.g., a man walking with a gun) are highlighted in red as being “suspicious”.

Panels: Database Sequence | Input Sequence | Output: Detected Suspicious Behaviors

Behavioral saliency is measured relative to all the other parts of the video sequence recorded at the same time. In this example, all the people wave their arms, and one person behaves differently. There is no database besides the input sequence.

Panels: Input Sequence | Output: Detected Salient Behaviors (in red)

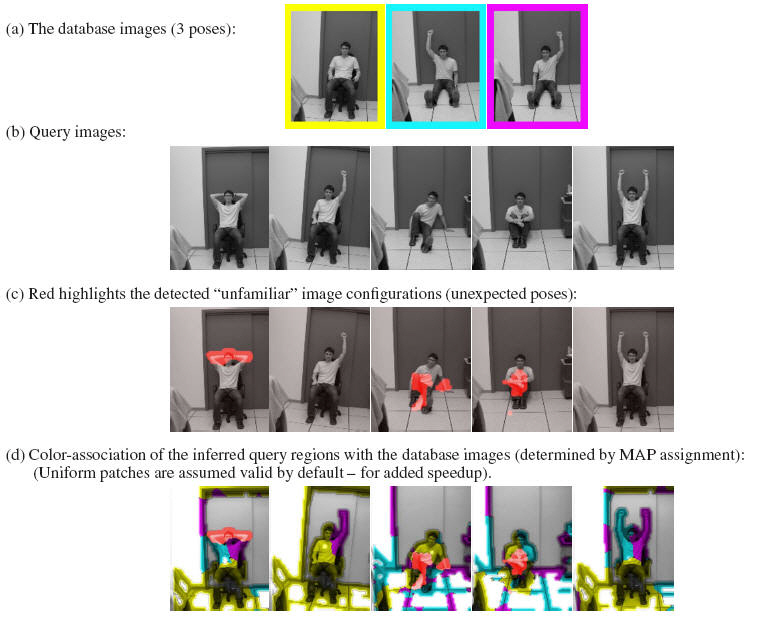

New valid poses are automatically inferred from the database (e.g., a man sitting on a chair with both arms up, a man sitting on a chair with one arm up), even though they have never been seen before. New pose parts which cannot be inferred from the three database images are highlighted in red as being “unfamiliar”. The color association in (d) indicates, for each pixel, which database image was used to infer it (i.e., the image that assigned it the highest likelihood).
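The per-pixel color association can be pictured as an argmax over database images. The following is a minimal sketch under stated assumptions: it takes as given one likelihood map per database image (how those likelihoods are computed is outside this sketch), and the function name `associate_pixels` and the `min_likelihood` cutoff for marking a pixel "unfamiliar" are hypothetical.

```python
def associate_pixels(likelihood_maps, min_likelihood=0.5):
    """likelihood_maps[k][r][c] is an assumed likelihood that database
    image k explains pixel (r, c). Returns a label map holding the index
    of the best database image, or None where even the best likelihood
    falls below min_likelihood (treated here as "unfamiliar")."""
    rows = len(likelihood_maps[0])
    cols = len(likelihood_maps[0][0])
    labels = []
    for r in range(rows):
        row = []
        for c in range(cols):
            values = [m[r][c] for m in likelihood_maps]
            best = max(range(len(values)), key=values.__getitem__)
            row.append(best if values[best] >= min_likelihood else None)
        labels.append(row)
    return labels


# Three database images, a 1x2 "image": the first pixel is explained well
# by database image 0, the second is not explained well by any of them.
maps = [[[0.9, 0.1]], [[0.2, 0.3]], [[0.1, 0.2]]]
print(associate_pixels(maps))  # [[0, None]]
```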

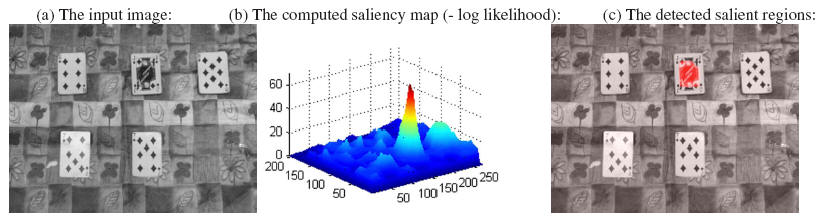

In this application we detect spatially salient regions in a single image by trying to compose it using the rest of the image. The Jack card was detected as salient. Note that even though the diamond cards are different from each other, none of them is identified as salient.
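A rough way to picture "composing a region from the rest of the image" is a nearest-patch search. The sketch below is an assumption-laden simplification, not the method used to produce the result above: it uses non-overlapping square patches, a sum-of-squared-differences distance, and a single best match per patch. The names `extract_patch`, `ssd`, and `patch_saliency` are hypothetical.

```python
def extract_patch(image, top, left, size):
    """Flatten the size x size patch whose top-left corner is (top, left)."""
    return [image[top + r][left + c] for r in range(size) for c in range(size)]


def ssd(a, b):
    """Sum of squared differences between two flattened patches."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def patch_saliency(image, size=2):
    """Map each non-overlapping patch corner to the distance of its best
    match among all other patches. A large value means the patch cannot be
    explained by the rest of the image. Requires at least two patches."""
    rows, cols = len(image), len(image[0])
    corners = [(t, l)
               for t in range(0, rows - size + 1, size)
               for l in range(0, cols - size + 1, size)]
    patches = {c: extract_patch(image, c[0], c[1], size) for c in corners}
    return {c: min(ssd(p, q) for other, q in patches.items() if other != c)
            for c, p in patches.items()}


# A repeating 2x2 texture with one foreign block at rows 2-3, columns 2-3.
image = [
    [1, 2, 1, 2, 1, 2],
    [3, 4, 3, 4, 3, 4],
    [1, 2, 9, 9, 1, 2],
    [3, 4, 9, 9, 3, 4],
]
scores = patch_saliency(image)
print(max(scores, key=scores.get))  # (2, 2)
```

Matching single patches is much weaker than composing from large contiguous chunks, so this toy would not reproduce the behavior noted above for the diamond cards; it only shows the "no external database" setup.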

Our approach can be used for automatic visual inspection. Automatic visual inspection is widely used for quality assurance in the manufacture of goods, electronic printed boards, wafers, etc. We identify defects as irregularities relative to a "good" reference. Often, inspected products exhibit repeating patterns (e.g., wafers, fabric, flat panel displays). In these cases we can use our saliency approach to detect defects without any prior examples.

Below we show examples of applying our approach to detect defects in fabric, wafers and fruit.

Fabric Inspection (no reference): Input | Detected Defects (in red)

Wafer Inspection (no reference): Input | Output (two examples)

Fruit Inspection (a single 'good' reference)