Matching Local Self-Similarities across Images and Videos*

IEEE Conference on Computer Vision and Pattern Recognition 2007 (CVPR'07)

*Patent Pending

Abstract

We present an approach for measuring similarity between visual entities (images or videos) based on matching internal self-similarities. What is correlated across images (or across video sequences) is the internal layout of local self-similarities (up to some distortions), even though the patterns generating those local self-similarities are quite different in each of the images/videos. These internal self-similarities are efficiently captured by a compact local “self-similarity descriptor”, measured densely throughout the image/video, at multiple scales, while accounting for local and global geometric distortions. This gives rise to matching capabilities of complex visual data, including detection of objects in real cluttered images using only rough hand-sketches, handling textured objects with no clear boundaries, and detecting complex actions in cluttered video data with no prior learning. We compare our measure to commonly used image-based and video-based similarity measures, and demonstrate its applicability to object detection, retrieval, and action detection.

Download the CVPR'07 paper in PDF (3MB) format (BibTeX).

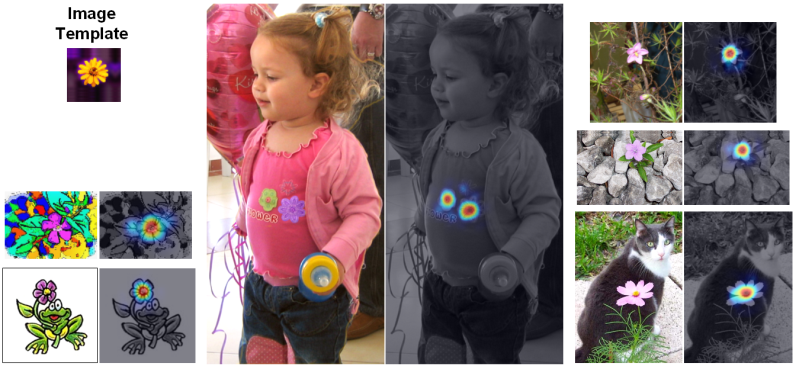

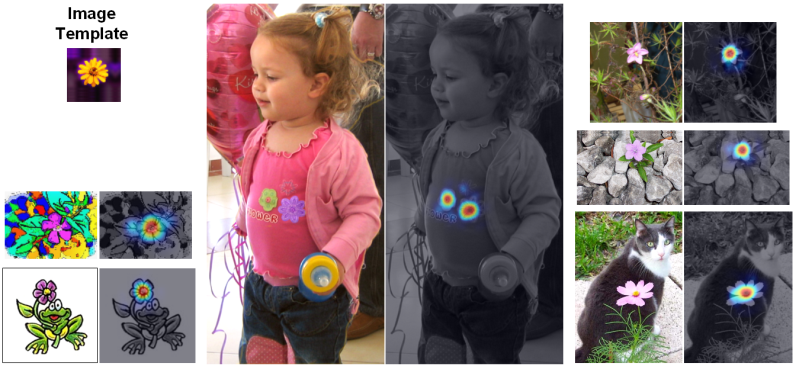

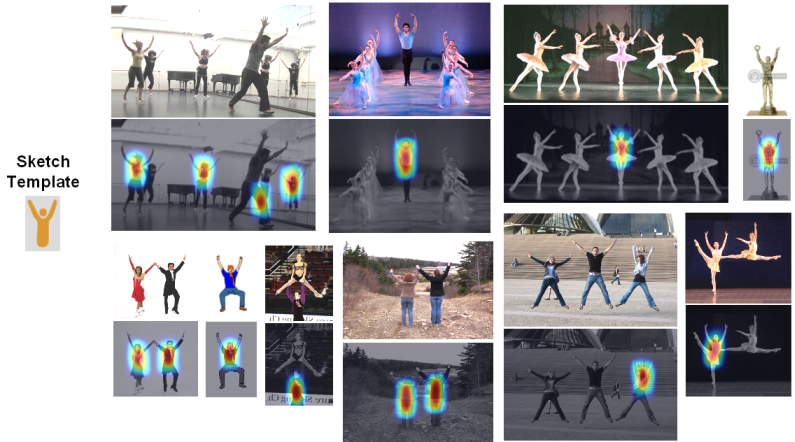

Below are a few examples of object detection in images and action detection in video using the self-similarity descriptors.

See more details and examples in the paper.

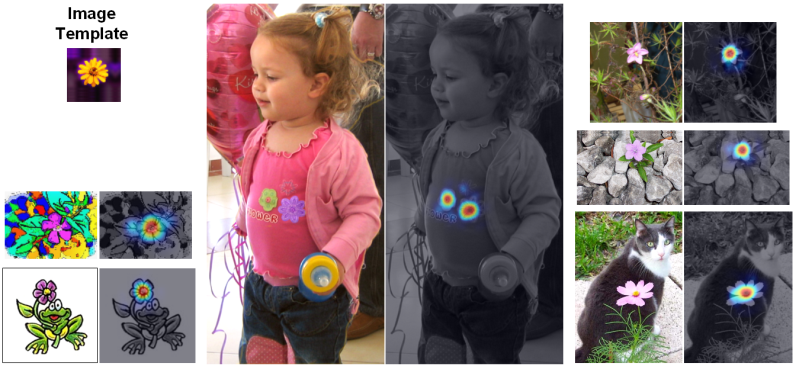

Object Detection in Images

| Detection by an image Template |

|

| Detection by a Sketch |

|

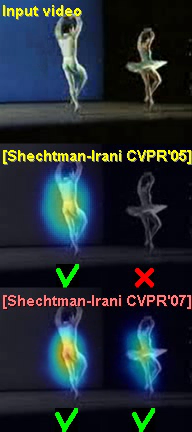

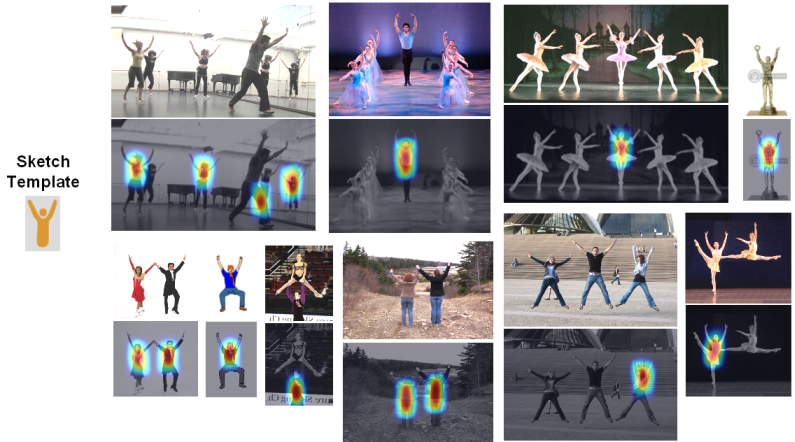

Action Detection in Video

CLICK an image to play the VIDEO

| Ballet template |

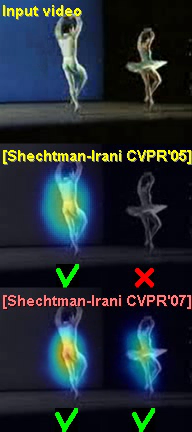

Input vs.

"Behavioral Correlation" vs.

OUR NEW RESULT |

|

|

|

Left - A template video clip (ballet turn). Right - A long ballet video sequence (25 seconds) with two dancers, against which the template was compared. The middle row shows the detection results obtained by our previous method ([Shechtman and Irani, CVPR'05]), where there are 2 missed detections and 4 false alarms (marked by red ”X”). The bottom row shows our new results: all instances of the action were detected correctly with no false alarms.

|

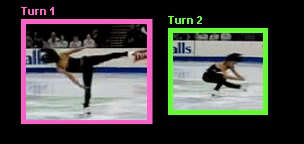

| Ice-skating templates |

Input vs.

OUR RESULT |

|

|

|

Left - Action templates of two different ice-skating turns. Right - A long ballet video sequence (30 seconds) of a different ice-skater, and the corresponding detection results below. All instances of the two actions were detected correctly with no false alarms, despite the strong temporal aliasing.

|

@inproceedings{SelfSim_ShechtmanIrani07,

author = {Eli Shechtman and Michal Irani},

title = {Matching Local Self-Similarities across Images and Videos},

booktitle = {IEEE Conference on Computer Vision and Pattern Recognition 2007 (CVPR'07)},

month = {June},

year = {2007},

ee = {www.wisdom.weizmann.ac.il/~vision/SelfSimilarities.html},

}

Contact Details

For further details please contact: Eli Shechtman

Page last updated: 9-May-2007