What Does the Scene Look Like from a Scene Point?

M. Irani, T. Hassner and P. Anandan

Abstract

In this paper we examine the problem of synthesizing virtual views from scene points within the scene, i.e., from scene points which are imaged by the real cameras. On the one hand, this provides a simple way of defining the position of the virtual camera in an uncalibrated setting. On the other hand, it implies extreme changes in viewpoint between the virtual and real cameras. Such extreme changes in viewpoint are not typical of most New-View-Synthesis (NVS) problems.

In our algorithm the virtual view is obtained by aligning and comparing all the projections of each line-of-sight emerging from the “virtual camera” center in the input views. In contrast to most previous NVS algorithms, our approach requires neither prior correspondence estimation nor any explicit 3D reconstruction. It can handle any number of input images while simultaneously using the information from all of them. However, very few images are usually enough to provide reasonable synthesis quality. We show results on real images as well as synthetic images with ground-truth.
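The idea of comparing the projections of one line of sight across all input views can be illustrated with a rough sketch. This is not the algorithm of the paper: the paper works in an uncalibrated setting, whereas the sketch below assumes, purely for illustration, that each input view comes with a known 3x4 projection matrix and that candidate points along the ray are sampled at given depths. The function name `render_ray` and all of its parameters are hypothetical.

```python
import numpy as np

def render_ray(center, direction, depths, cameras, images):
    """Pick a colour for one line of sight of the virtual camera.

    Assumption (not from the paper): `cameras` holds known 3x4 projection
    matrices. For each candidate depth along the ray, the 3D point is
    projected into every input view; the depth whose samples agree best
    (lowest variance) wins. Returns (mean colour, chosen depth), or
    (None, None) if no depth is seen by at least two views.
    """
    best_score, best_colour, best_depth = np.inf, None, None
    for t in depths:
        X = np.append(center + t * direction, 1.0)  # homogeneous 3D point
        samples = []
        for P, img in zip(cameras, images):
            x = P @ X
            if x[2] <= 0:  # point is behind this camera
                continue
            u, v = np.rint(x[:2] / x[2]).astype(int)  # nearest-pixel lookup
            h, w = img.shape[:2]
            if 0 <= u < w and 0 <= v < h:
                samples.append(np.atleast_1d(img[v, u]).astype(float))
        if len(samples) < 2:  # need at least two views to compare
            continue
        samples = np.stack(samples)
        score = samples.var(axis=0).mean()  # colour disagreement across views
        if score < best_score:
            best_score, best_colour, best_depth = score, samples.mean(axis=0), t
    return best_colour, best_depth
```

Calling `render_ray` once per pixel of the virtual image, with `center` set to the chosen scene point, would produce a full synthesized view under these assumptions. Variance is only one possible agreement measure, and nearest-pixel lookup is used here only to keep the sketch short.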

Full paper : ECCV2002-NVS.pdf (1,175kb)

Some results

Example 1 : The Coke-Can Scene

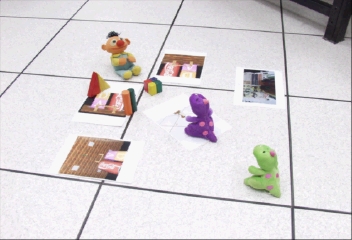

Example 2 : Folder Scene

Input images

Synthesized view from the green dinosaur

Synthesized view from the purple dinosaur

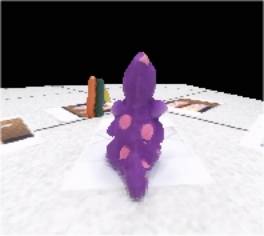

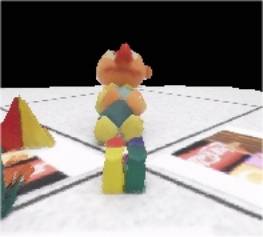

Example 3 : Puppet Scene

Input images

Synthesized view from the green dinosaur

Synthesized view from the purple dinosaur

Faculty of Mathematics and Computer Science

Last update : July 8, 2002

For questions and remarks contact hassner@wisdom.weizmann.ac.il