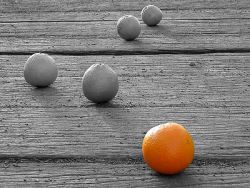

Drop The GAN: In Defense of Patch Nearest Neighbors as Single Image Generative Models

Accepted to CVPR 2022

Niv Granot, Ben Feinstein, Assaf Shocher, Shai Bagon, Michal Irani

[arXiv] [Code]

Abstract

Image manipulation dates back long before the deep learning era. The classical prevailing approaches were based on maximizing patch similarity between the input and generated output. Recently, single-image GANs were introduced as a superior and more sophisticated solution to image manipulation tasks. Moreover, they offered the opportunity not only to manipulate a given image, but also to generate a large and diverse set of different outputs from a single natural image. This gave rise to new tasks, which are considered "GAN-only". However, despite their impressiveness, single-image GANs require long training time (usually hours) for each image and each task and often suffer from visual artifacts. In this paper we revisit the classical patch-based methods, and show that – unlike previously believed – classical methods can be adapted to tackle these novel "GAN-only" tasks. Moreover, they do so better and faster than single-image GAN-based methods. More specifically, we show that: (i) by introducing slight modifications, classical patch-based methods are able to unconditionally generate diverse images based on a single natural image; (ii) the generated output visual quality exceeds that of single-image GANs by a large margin (confirmed both quantitatively and qualitatively); (iii) they are orders of magnitude faster (runtime reduced from hours to seconds).
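To make the phrase "maximizing patch similarity between the input and generated output" concrete, here is a minimal sketch of one classical patch nearest-neighbor step on a grayscale image. It is not the authors' method or code: the "slight modifications" mentioned in the abstract are not shown, and the function names (`extract_patches`, `nearest_neighbor_indices`, `patch_nn_step`), the patch size, and the plain squared-L2 distance are all assumptions chosen for illustration.

```python
import numpy as np

def extract_patches(img, p):
    """Return all overlapping p x p patches of a 2-D image as rows of a matrix."""
    h, w = img.shape
    rows, cols = h - p + 1, w - p + 1
    patches = np.empty((rows * cols, p * p), dtype=np.float64)
    for i in range(rows):
        for j in range(cols):
            patches[i * cols + j] = img[i:i + p, j:j + p].ravel()
    return patches

def nearest_neighbor_indices(queries, keys):
    """For each query patch, index of the key patch with the smallest squared L2 distance."""
    # ||q - k||^2 = ||q||^2 - 2 q.k + ||k||^2, computed for all pairs at once.
    d = (
        (queries ** 2).sum(axis=1)[:, None]
        - 2.0 * queries @ keys.T
        + (keys ** 2).sum(axis=1)[None, :]
    )
    return d.argmin(axis=1)

def patch_nn_step(guess, source, p=3):
    """Replace each patch of `guess` by its nearest source patch, then average overlaps."""
    src = extract_patches(source, p)
    idx = nearest_neighbor_indices(extract_patches(guess, p), src)
    h, w = guess.shape
    cols = w - p + 1
    out = np.zeros((h, w))
    weight = np.zeros((h, w))
    for k, n in enumerate(idx):
        i, j = divmod(k, cols)  # top-left corner of the k-th patch in `guess`
        out[i:i + p, j:j + p] += src[n].reshape(p, p)
        weight[i:i + p, j:j + p] += 1.0
    return out / weight  # every pixel is covered by at least one patch

# Usage: start from a perturbed copy of the source and apply one step.
rng = np.random.default_rng(0)
source = rng.random((8, 8))
guess = source + 0.01 * rng.standard_normal((8, 8))
result = patch_nn_step(guess, source, p=3)
```

Because every output patch is copied from the source and overlapping patches are averaged, the result is built only from content that exists in the input image. The brute-force distance matrix here is quadratic in the number of patches, so this sketch is only practical for small images.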

Supplementary Material

1. Diverse image generation based on a single image

2. Conditional Inpainting

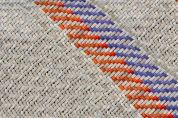

3. Structural Analogies

4. Retargeting

5. Collage

6. Editing

7. User-Study Images

8. Runtime and Memory

9. Implementation Details

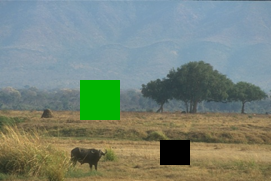

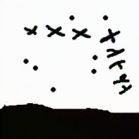

Figure panels: Single Source Image | GPNN (Ours) | SinGAN [23]

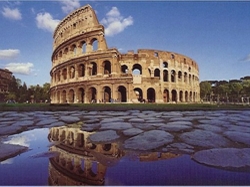

Figure panels: Source Image

Figure panels: Input A | Input B | A to B | B to A

Figure panels: Source | GPNN (Ours)

Figure panels: Source Images | GPNN (Ours)

Figure panels: Source Image | Edit