| Theory

and Thermodynamics |

| What

do theory and practice of electronic computers say about energy consumption?

In

practice, electronic computers require huge amount of energy and they generate

a lot of heat while computing. As a result the task of cooling down the

computer components is a central problem in computer engineering. This

problem prompted several prominent computer scientists such as C. H. Bennett,

R. Landauer, E. Fredkin and T. Toffoli to investigate intrinsic limits

on a computer energy consumption and generation of waste heat. Their research,

spanning three decades, came to a conclusion that a computation process

may be performed reversibly, generating zero waste. Moreover, they concluded

that most computer operations could be performed without external energy,

although such computation would take infinitely long time and introduce

high error rate. However, one operation was singled out for its intrinsic

demand for energy investment. This is the őreset‚ operation: setting an

unknown bit to zero. The őreset‚ operation would decrease the entropy of

the system, which must be compensated by putting some energy into the system

in order to comply to the second law of thermodynamics. |

|

| How

does DNA store energy?

őEnergy

storage‚ is somewhat evasive term. From high-school mechanics we learn

that potential energy is something that may be exploited to do some work.

A physical system tends to rearrange from a state with high energy to a

state with lower energy and perform some work due course. A stone raised

above the ground gains potential energy and as it falls, it may break whatever

it hits, or just heat the ground a little bit. So the notion of potential

or stored energy is not an absolute but rather a relative property. In

a same sense, a DNA polymer molecule stores significant amount of energy

relative to the same polymer broke into little pieces - its monomers. The

DNA molecules do not fall instantly apart due to high energetic barriers

that confine the polymer in a kind of an energetic őwell‚. However, nuclease

enzymes take the polymer over these energetic barriers all the way to breaking

apart. As a result, energy is released. The fate of this energy may depend

on whether we may utilize it or not. For example, breaking of the chemical

bonds of the ATP, which is chemically similar to the kind of bond broken

in DNA decomposition, is exploited by the cell to fuel numerous biomolecular

transformations. This is in essence equivalent to throwing down a stone

in order to raise some other stone further up using a lever. Energy released

by DNA decomposition may also be exploited to do useful things, such as

molecular computation.

Another

kind of energy may be stored in single-stranded DNA molecules relative

to the hybridized, double-stranded version. In this process no chemical

bonds are either broken or formed, but rather many weak non-covalent bonds

are formed. |

|

| Was

DNA ever used to fuel other non-computational processes?

Yes.

A number of nano-scale DNA-based mechanical devices were designed by B.

Yurke and colleagues, and N. Seeman and colleagues. These devices perform

conformational changes as a result of various hybridization processes between

their single-stranded DNA components and external single-stranded DNA serving

as őfuel‚. As far as we know, decomposition of DNA was never used to drive

any useful processes.

|

|

| How

is our computer fueled by its input?

As

we mentioned, DNA would break into pieces unless high energetic barriers

would prevent it from doing it. Nuclease enzymes lower these barriers and

allow DNA to decompose. Some of the nucleases cleave very precisely at

certain locations, determined by the exact sequence of the nucleotides

at or near their cleavage sites. Yet, even such specific cleavage would

only generate useless heat. In our molecular computer we use this energy

to drive a useful process, namely computation. It should be understood

that on the physico-chemical level we have a sequence of chemical reactions,

resulting in a certain chemical product. This chemical process would not

happen if energy would not be released at each step. The energy is indeed

released because we break DNA into smaller pieces with the help of FokI

restriction nuclease. At a conceptual level, the sequence of chemical reactions

are steps of computation, and the final product is a computational output.

The whole computation process and output formation depend on the energy

stored in the starting material, a molecule encoding an input data. Therefore,

the computation (an abstract notion) is made possible to the (physical)

energy stored in the (physical implementation of its) input relative to

the energy stored in its output. There are no other sources of energy;

the computation is fueled solely by its input. |

|

| Computer

science |

| What

exactly is a state machine and a finite automaton?

Generally,

a state machine consists of 1) a data tape divided into cells, each containing

a symbol selected from the tape alphabet, and 2) a computing device driven

by transition rules. The device is positioned over one of the cells and

is in one of a finite number of internal states. Depending on the symbol

detected and on the internal state, a transition rule instructs the device

to write a new symbol, change state and move one cell to the left or to

the right. The Turing machine is the most general type of state machine,

capable of writing on the tape as well as moving in both directions.

A

more restricted, yet important, class of state machines is finite automata.

A finite automaton is a unidirectional read-only Turing machine. Its input

is a finite string of symbols. It is initially positioned on the leftmost

input symbol in a default initial state, and in each transition moves one

symbol to the right, possibly changing its internal state. Its software

consists of transition rules, each specifying a next state based on the

current state and current symbol. A computation terminates after the last

input symbol is processed, the final state being its „outputš. Alternatively,

it may suspend without completion when no transition rule applies. Some

states are deemed accepting. An automaton accepts an input if there is

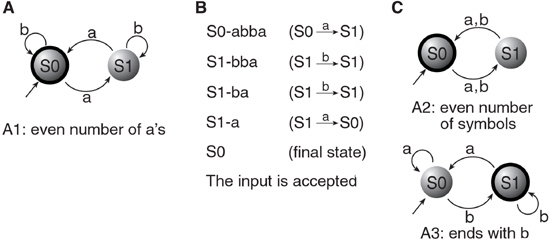

a computation with this input that ends in an accepting final state. A

finite automaton with two states and an alphabet of two symbols is shown

in Fig. 1A. It determines if an input over the alphabet {a, b} contains

an even number of a‚s. Fig. 1B shows the intermediate configurations obtained

during a computation of this automaton. A two-state two-symbol automaton

can have eight possible transition rules. Programming such an automaton

amounts to selecting transition rules and deciding which states are accepting.

A selection of such programs is shown in Fig. 1C

.

Fig.

1. Finite automata and inputs for which we show molecular realizations.

(A) Automaton A1. Incoming unlabeled arrow marks the initial state, and

labeled arrows represent transition rules. A double circle represents an

accepting state. (B) A computation of A1 scanning and digesting the input

abba. Each row represents an intermediate configuration showing current

state and remaining input. The transition rule applied is shown in brackets.

(C) The two other automata for which we demonstrate molecular operation.

|

|

| Implementation

details |

| What

are the similarities and the differences between the current computer and

the previous one?

Similarities:

1)

Both implement two-state two-symbol finite automata

2)

Both have stretches of double-stranded DNA to encode the input strings.

Both work on artificial inputs of well-defined structure rather than on

any DNA molecule

3)

Both encode current state and symbol in a single-stranded sub-sequence

of the input symbols.

Differences:

1)

The new computer does not use ATP and is fueled by its input; the old one

relied on ATP as its energy source.

2)

The new computer recycles its software and hardware; the old one consumed

one software molecule per transition.

3)

The new computer is 50 times faster and performs 8000 times more operations

in parallel than the old one

4)

The new computer can process much longer input strings. We could routinely

compute on inputs 12 symbols long but also got results with 20-symbols

long strings. The yield of a single step rose above 98%. The old one could

only process 6-symbol inputs on a good day, with about 50% single step

yield

5)

The new computer has a completely redesigned structure, making possible

all the improvements summarized above

|

|

| Is

there an increase in error rate as a result of speeding the computation

up?

No.

The error rate is more or less constant under different conditions employed |

|

| Laboratory

procedure |

| How

do we construct the molecular components?

All

DNA molecules are ordered from a manufacturer that supplies single-stranded

DNA molecules according to specification (exact sequence, amount, mode

of purification). We then purify the molecules and employ various chemical

procedures to get to the desired molecular structures (such as stepwise

ligation of DNA blocks to build long synthetic inputs) |

|

| How

is the computation performed?

We

mix all the components of the computer: its radioactively-labeled input,

software and hardware in 10 microliter of solution and let the process

run for several minutes at 8oC. We then analyze the outcome

by denaturing gel.

|

| What

is radioactive labeling?

Using

naturally occurring enzyme and radioactive ATP molecules we can put a radioactive

isotope of phosphorous on a terminus of a DNA strand. This label is very

sensitive and can supply quantitative information. |

|

| What

is denaturing gel electrophoresis and how does it work?

You

have a gel with two sides attached to electrodes, and place the DNA near

the minus electrode. The gel is heated and it contains urea, which causes

strand dissociation, so that each single strand is on its own. The DNA

strands travel slowly inside the gel towards the plus electrode, at a speed

that is a function of the molecule size. If you stop the process at the

right time, you have a spread of the molecules according to their length.

If you add on the side a DNA mix with a "ladder" of DNA molecules of known

lengths, you can determine the lengths of the different DNA molecules in

the original solution. Some of the molecules are labeled by radioactive

phosphorous, and we can see their location on a gel using special sensitive

plates and readout device. |

|

| General

Questions |

|

| Could you describe your work in general terms?

In a feat of molecular nanotechnology, scientists at the Weizmann Institute were able to construct a self-contained, programmable computing device using only three types of molecules --- a DNA input molecule, encoding the data and providing the fuel for the computation, DNA software molecules, encoding the rules of computation, and a hardware molecule, a DNA-cutting enzyme. Combining an input data and an energy supply for the computation in the same physical entity is unthinkable of in the realm of electronic computers. The computing device is so small that 3 trillion such devices can reside in one microliter of solution [or - 60 trillion devices in a teardrop], a fact that won it the Guinness World Record for the world's smallest biologic al computing device.

|

|

What do you find most surprising or exciting about these results?

A DNA molecule stores information (the genetic code) and energy. Nature uses DNA as an information storage, but does not exploit it as energy supply. Moreover, previous DNA computers used ATP, energy currency of biochemical processes, as a power source. Our experiments demonstrate for the first time that we may use a DNA molecule as an input for computation, and at the same time to fuel this computation by the energy stored in the very same molecule. Such combination, although theoretically concievable, is practically impossible with conventional electronic computers.

Also, as a result, we were able to create a computing device from only three types of molecules -- a DNA molecule providing input data and fuel, a DNA molecule acting as software, and an enzyme acting as hardware. The resulting computing device is so small that it won the GWR as the world's "smallest biological computing device".

|

|

What do you feel is the most important implication of these findings? What do you feel is the point of this project for you?

It is a step forward for using of biomolecules as building blocks from programmable computers. The long term goal is to eventually create autonomous, programmable molecular computing devices that can operate in vivo, eventually inside the human body, and function as "doctors in a cell", detecting anomalies in their biochemical environment and using their programmed medical knowledge to direct the synthesis and delivery of biomolecules that serve as drugs.

On a more scientific level, we developed a biochemical process that makes rather complex computation on biological inputs using energy inherently stored in these inputs. Neither in Nature nor in artificially created system can we find similar patterns of information processing. We hope that our results may change the current view of the interplay between information and energy in both natural and artificial systems.

|

|

How did you become interested in this idea to begin with?

I was an "ordinary" computer scientist and got involved in the Internet industry from its early days. As the industry matured I looked for an interesting new direction, and began self-study of molecular biology. I was fortunate enough both to be approached by bright students, such as Yaakov Benenson, the first author of this paper, and to be granted the facilities from the Weizmann Institute to carry out this interdisciplinary research.

|

|

| Compared

to a normal computer, how does the molecular computer work?

All electronic computers work according

to a mathematical model developed by John von Neumann in the 1940s, called

the stored program computer. In it both program and data are stored as

words in the computer memory. Each memory word can be accessed by its address.

The electronic computer fetches program instructions one by one from its

memory and executes them. Typically the instructions are to load data from

a certain memory location into a register, to store the content of a register

into a memory location, or to perform an operation on registers, for example

to add the content of two registers and store the result in a third register.

The stored program computer model is ideal

for realization using electronic circuits, and hence it has been the basis

of all electronic computers for more than 50 years.

Our molecular computer is based on a very

different model, called a finite automaton, which is a simplified version

of the Turing machine, a mathematical model of computation developed by

the British mathematician Alan Turing in the 1930s. It has data stored

as a string of symbols (Turing envisioned a paper tape divided into squares,

each holding one symbol) and a control unit, which holds the program rules,

is in a particular location on the string and can be in one of a finite

number of states. The Turing machine starts the computation in an initiate

state and on the leftmost symbol in then string. In each cycle a Turing

machine reads a symbol and executes a program rule that specifies what

action to take according to the symbol read and the internal state. The

actions can be to replaces the symbol with a new symbol, move one symbol

to the left or to the right, and/or change internal state. A finite automaton

is a Turing machine that cannot change the string of symbols, and in each

step moves one symbol to the right. Hence the result of the computation

of the finite automaton is the final state reached upon reaching the last

input symbol.

Both the stored program computer and the

Turing machine are so-called "universal computers" and as such have equivalent

computing power. A finite automaton is much weaker.

Our molecular finite automaton has three

components. An input molecule, which stores the data to be processed as

well as the fuel needed for the computation, software molecules, which

encode program rules, and a hardware molecule which performs the computation,

as directed by the software and the input. The molecules float in a watery

solution containing some salts needed for the operation of the hardware

molecule.

The input molecule is a double-stranded

DNA molecule. A mathematical trick is used to store the current state and

current symbol to be processed in a "sticky-end" of the DNA molecule, a

single-stranded protrusion obtained by having one strand longer than the

other. The software molecules also have "sticky-ends", each matching a

different state-and-symbol input combination. Through a spontaneous chemical

process called DNA hybridization, the input molecule and a matching software

molecule temporarily connect to each other. The hardware is a naturally

occurring molecule, an enzyme called FokI that attaches to DNA in a specifically

coded location and cuts both strands at a fixed distance from that location,

creating a sticky end. Using additional chemical and mathematical tricks,

the software molecules are designed so that the hardware enzyme attaches

to them and, once a software molecule hybridizes with an input molecule,

the hardware molecule cuts the input molecule in a programmed location,

exposing a new sticky end that encodes the next state and the next symbol

to be processed. The final sticky end, obtained at the end of the computation,

encodes the computation result.

|

|

| What

exactly can the molecular computer do?

Our molecular computing device realizes

a so-called "two-state two-symbol finite automaton", which can do a rather

limited set of computations, depending on which program molecules are used

(i.e. mixed in the watery solution). For example it can detect if a list

of 0's and 1's has an even number of 1's; if it has at least one 0; if

it has no two consecutive 1's; if it has no 0's after a 1; or if it starts

with 1 and ends in 0.

|

| How

do performances compare with a traditonal computer - a pc for example?

Each molecular computer is rather slow,

on the average it performs one operation per 20 seconds. Also, a PC is

a "universal computer" whereas out molecular finite automaton is very limited

in its computing capabilities. Nevertheless, if we consider the size and

energy consumption, then a spoonful (5 milliliters) of our "computer soup"

contains 15,000 trillion computers that together perform 330 trillion operations

per second, which is more than 100,000 times faster than the fastest PC.

This spoonful releases less than 25 millionth of a Watt, hence its energy-efficiency

is more than a million times that of a PC.

|

| What

will the impact of this research be?

This is basic research at an early stage,

it may be too early to predict its impact. However we envision biomolecular

computing devices operating as "smart components" in biochemical reactions,

which may have pharmaceutical and biomedical applications in the future.

|

|

Where do you plan to go from here in your research?

There are several directions in which our work can be improved. One is the creation of more powerful computing devices. Another is making the devices operate "in vivo", and the third is looking for shorter-term applications in biotechnology and biomedical research.

|

|

What obstacles do you foresee in future research or development?

The main hurdle, which will take a decade or so to overcome, is science's inability to synthesize "designer enzymes". We can specify our needs precisely, we, unlike in electronic computers, science does not know how to create enzymes that meet our needs.

|

|

| Can

we expect to see this work applied in the future?

In the normal course of events it may take

decades for this research to be applied. But basic research sometimes has

surprising and unexpected ways in which it may be applicable.

|

| What

is new about this latest work, compared to previous research into biological

computing?

The key conceptual advance, compared to

our earlier molecular automaton published more than a year ago, is that

the new automaton uses its DNA input as its source of fuel, hence it does

not need an external fuel supply. In addition, in our previous molecular

automaton, each computational step consumed one software molecule, and

hence the computation needed an ongoing supply of software molecules. The

new automaton does not, so the net result is that a fixed set of software

and hardware molecules can process any input molecule, of any length, without

an external supply of energy.

|

| What

other work has been done in this field?

The field started in 1994 with the publication

of a paper by Len Adleman, describing how one can use DNA to solve combinatorial

problems, such as the so-called "traveling salesman problem" which is to

find an optimal route through several cities, given distances between them.

The hope was that the parallel nature of DNA manipulation can be used to

outperform electronic computers in solving such problems.

This approach involves multiple outside interventions during computation. A solution containing the molecular components is subjected to mixing, separations, PCR amplification, etc several times during computation. Therefore, it is not truly "molecular computers" but rather "lab-assisted molecular computers", where the lab personnel plays a crucial role in accomplishing a computation.

Today fewer people believe this can

be achieved due to inherent limitations of the approach and the rapid advance

of electronic computers. Our work does not attempt to compete with electronic

computers head-on, but rather to design molecular-scale computing devices,

which might have applications in areas not accessible to electronic computers,

such as "smart components" in biochemical reactions.

Another line of research is "semi-autonomous molecular computation", which is closer to our work. This direction is pursued by Hagiya and Sakamoto in Tokyo University, and by Winfree, Reif and Seeman in the USA. This approach aims at creating systems that evolve in a programmed fashion to perform a computation, while minimizing the external intervention. These researches were successful in performing very simple computations without much interference except regulating the temperature during the process (in a PCR manner - multiple cycles of heating/cooling). Still, experimentally demonstrated computation capabilities of these systems is substantially lower than that of our devices, and they are not completely autonomous.

The self-sufficiency is crucial for the future success of such devices, as we cannot control the processes on the cellular level from the outside. Moreover, our recent result demonstrates that useful computation may be performed on the expense of biopolymer degradation. As biopolymers are constantly degraded in a cell, we might envision "smart components" that utilize this degradation without placing any additional energetic burden on a cell.

|

|

Are there any questions or criticisms you feel others might have about these findings?

Only that it is very early stage basic research, if this is considered criticism.

|

|

If

so, would it be part of some machine I would recognize as a computer,

or if not, what

would it look like in use?

No, we are aiming at molecular computers

that operate in a biochemical environment.

|

| Since

DNA stores both information and energy, did you manipulate the molecules

to retain certain information and energy, or were these present inherently?

We synthesized the molecule to containing

the input information we wanted to test in a computation. For example

if we wanted to run the computation on the list "aabba" we synthesized

a DNA molecule that encodes that list, using standard lab techniques.

The energy is inherent to the chemical structure of DNA, and is independent

of the actual data it encodes, only on the length of the molecule.

|

| From

what source (animal, human) did you obtain the DNA and the Fokl?

DNA synthesized in a lab using standard

techniques, we purchased it according to out spec from outside supplier.

FokI enzyme originally comes from a bacteria named Flavobacterium okeanokoites.

It is currently produced with the help of recombinant E. coli strain that

overexpresses the gene for this enzyme.

|

| Must

the computer operate in a liquid solution?

Yes, all these chemical reactions can only

happen in water solution at certain pH values and salt concentrations.

These conditions (pH and salt) are required to allow the normal function

of the hardware enzyme FokI.

|

| Is

there a finite lifespan to the computer, and the number of operations it

can perform?

Theoretically no, but enzymes are known

to get "tired" after a while, so fresh enzyme supply, and cleaning debris,

might be needed after long computations.

|

| The

PNAS summary states that the device has been named "the world's smallest

biological computing device." Can we also say that it's the world's smallest

computer? If not, why would that be inaccurate?

"Computer" normally refers to general-purpose

programmable universal computing device. Our computer is programmable

but is not universal (there are computing tasks it inherently can't do)

since it is a realization of a weak model of computation called "finite

automaton". Hence the caution in the phrasing.

|

| How

large are the molecules and how do they compare to biological human DNA?

We

use 8 base pairs per input symbol, with some tail, for the input, and short

oligos for the software molecules, with one strand 4 nt longer than the

other (Fig. 2 of the PNAS paper). Each of the software molecules is about

20 base pairs long. They are artificially constructed molecules, much much

shorter than human DNA.

|

| What

were the challenges you had to overcome to make the system run?

The

old molecular automaton did not work without ATP, although it could make

one or two steps, encouraging us to investigate the phenomenon more closely.

Making FokI to cleave DNA via noncovalent bridge proved to be a tricky

task and required many trial-and-error attempts before arriving at a working

design. Making the computer work fast was another problem and we had to

use a hardware enzyme in a stochiometric rather than catalytic amount. |