- The setup: scene and cameras

Two cameras (one visible-light PAL, the other infra-red NTSC) are placed next to each other and capture the same distant scene.

- Input sequences

| Camera 1 (visible light) | Camera 2 (infra-red) |
|---|---|
| Video: AVI (1.4 MB), MPEG (1.65 MB) | Video: AVI (600 KB), MPEG (1.15 MB) |

- Detect moving objects

... using background subtraction.

- Extract interest points

... by taking the centroid of each blob in each frame.

- Construct trajectories from these points

| Camera 1 (visible light) | Camera 2 (infra-red) |
|---|---|
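
The three steps above (background subtraction, blob centroids, trajectories) can be sketched as follows. This is a minimal illustration, not the original implementation: the function names, the fixed difference `threshold`, the 4-connected blob labelling, and the greedy nearest-neighbour linking with a `max_jump` limit are all assumptions made for the example. Frames are plain grayscale grids (lists of rows).

```python
from collections import deque

def moving_mask(frame, background, threshold=30):
    """Mark pixels whose absolute difference from the background exceeds threshold."""
    return [[abs(p - b) > threshold for p, b in zip(frow, brow)]
            for frow, brow in zip(frame, background)]

def blob_centroids(mask):
    """Return the (x, y) centroid of every 4-connected blob in a boolean mask."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    centroids = []
    for y0 in range(h):
        for x0 in range(w):
            if not mask[y0][x0] or seen[y0][x0]:
                continue
            # Flood-fill one blob, collecting its pixels.
            queue, pixels = deque([(x0, y0)]), []
            seen[y0][x0] = True
            while queue:
                x, y = queue.popleft()
                pixels.append((x, y))
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if 0 <= nx < w and 0 <= ny < h and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            n = len(pixels)
            centroids.append((sum(p[0] for p in pixels) / n,
                              sum(p[1] for p in pixels) / n))
    return centroids

def link_trajectories(centroids_per_frame, max_jump=5.0):
    """Greedy nearest-neighbour linking; each trajectory is a list of (t, x, y)."""
    trajectories = []
    for t, points in enumerate(centroids_per_frame):
        unused = list(points)
        for traj in trajectories:
            last_t, lx, ly = traj[-1]
            if last_t != t - 1 or not unused:
                continue  # trajectory already ended, or nothing left to assign
            best = min(unused, key=lambda p: (p[0] - lx) ** 2 + (p[1] - ly) ** 2)
            if (best[0] - lx) ** 2 + (best[1] - ly) ** 2 <= max_jump ** 2:
                traj.append((t, best[0], best[1]))
                unused.remove(best)
        # Every unmatched centroid starts a new trajectory.
        trajectories.extend([[(t, x, y)] for x, y in unused])
    return trajectories

# Synthetic example: a 2x2 bright blob moving one pixel to the right per frame.
background = [[0] * 8 for _ in range(5)]
frames = []
for shift in range(3):
    frame = [[0] * 8 for _ in range(5)]
    for y in (1, 2):
        for x in (1 + shift, 2 + shift):
            frame[y][x] = 200
    frames.append(frame)

centroids_per_frame = [blob_centroids(moving_mask(f, background)) for f in frames]
trajectories = link_trajectories(centroids_per_frame)
print(trajectories)
```

Greedy linking is the simplest choice and can confuse objects that pass close to each other; a real system would likely need a more robust data-association step.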

- Use the trajectories as features for the matching algorithm

- Recover homography (spatial alignment) and time shift (temporal alignment)

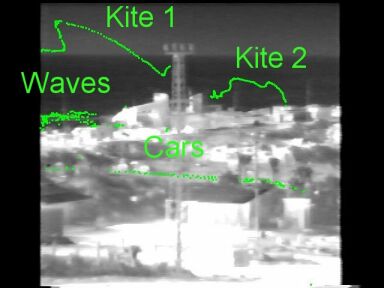
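
To illustrate how a homography and a time shift can be recovered jointly from trajectories, here is a hedged sketch that is not the matching algorithm used for these results. It assumes a single pair of already-matched trajectories, sampled at a common frame rate (the PAL and NTSC sequences would first need resampling), with coordinates normalized to order one for numerical conditioning. It tries every integer shift in a hypothetical range `max_shift`, fits a least-squares homography (with the bottom-right entry fixed to 1) for each, and keeps the shift with the lowest mean transfer error. All names are illustrative.

```python
import math

def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def fit_homography(src, dst):
    """Least-squares homography (h33 fixed to 1) mapping src points to dst points."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rhs.append(u)
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.append(v)
    # Normal equations: (A^T A) h = A^T b
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(8)] for i in range(8)]
    Atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(8)]
    h = solve_linear(AtA, Atb) + [1.0]
    return [h[0:3], h[3:6], h[6:9]]

def apply_homography(H, point):
    """Map a 2D point through H, including the projective division."""
    x, y = point
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)

def align(traj1, traj2, max_shift=5, min_overlap=8):
    """Pair traj1[t] with traj2[t + dt] for each candidate dt and return
    (mean transfer error, dt, H) for the best-fitting shift."""
    best = None
    for dt in range(-max_shift, max_shift + 1):
        pairs = [(traj1[t], traj2[t + dt]) for t in range(len(traj1))
                 if 0 <= t + dt < len(traj2)]
        if len(pairs) < min_overlap:
            continue
        src, dst = zip(*pairs)
        H = fit_homography(src, dst)
        err = 0.0
        for p, q in pairs:
            px, py = apply_homography(H, p)
            err += math.hypot(px - q[0], py - q[1])
        err /= len(pairs)
        if best is None or err < best[0]:
            best = (err, dt, H)
    return best

# Synthetic check: camera 2 sees the same path through H_true, 3 frames later.
def path(t):
    return (math.cos(0.3 * t) + 0.05 * t, 0.6 * math.sin(0.5 * t) + 0.002 * t * t)

H_true = [[1.1, 0.05, 0.4], [-0.03, 0.95, -0.2], [0.01, 0.02, 1.0]]
traj1 = [path(t) for t in range(30)]
traj2 = [apply_homography(H_true, path(t - 3)) for t in range(30)]

err, dt, H = align(traj1, traj2)
print("recovered time shift:", dt)
```

The exhaustive search over shifts is only practical for a small range, and the fit degenerates when the trajectory points are collinear (for example, a single object moving in a straight line), so several trajectories or a curved path are needed in practice.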

| Fused images (visible light and infra-red) |
|---|

... and the kite marked with a green circle is seen in visible light.

In the fused sequence we clearly see both kites.