Contour-Based Joint Clustering of Multiple Segmentations

Daniel Glasner*, Shiv N. Vitaladevuni*, and Ronen Basri

* Equal contribution authors, listed alphabetically.

Paper [PDF] [bibtex]

Supplementary Material [PDF]

Slides [PPTX]

Abstract

We present an unsupervised, shape-based method for joint clustering of multiple image segmentations. Given two or more closely related images, such as nearby frames in a video sequence or images of the same scene taken under different lighting conditions, our method generates a joint segmentation of the images. We introduce a novel contour-based representation that allows us to cast the shape-based joint clustering problem as a quadratic semi-assignment problem. Our score function is additive. We use complex-valued affinities to assess the quality of matching the edge elements at the exterior bounding contour of clusters, while ignoring the contributions of elements that fall in the interior of the clusters. We further combine this contour-based score with region information and use a linear programming relaxation to solve for the joint clusters. We evaluate our approach on the occlusion boundary dataset of Stein et al.
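For readers unfamiliar with the term, a quadratic semi-assignment problem can be written in the generic textbook form below. This is only a sketch: the symbols $x_{ik}$ (a binary indicator that segment $i$ is assigned to cluster $k$) and $c_{ijkl}$ (a pairwise score for assigning $i$ to $k$ and $j$ to $l$) are illustrative assumptions, not the paper's notation. The paper's actual score is built from its complex-valued contour affinities combined with region information.

```latex
\begin{aligned}
\max_{x}\quad & \sum_{i,j}\sum_{k,l} c_{ijkl}\, x_{ik}\, x_{jl} \\
\text{s.t.}\quad & \sum_{k} x_{ik} = 1 \quad \forall i, \\
& x_{ik} \in \{0,1\} \quad \forall i,k .
\end{aligned}
```

Replacing the binary constraint with $0 \le x_{ik} \le 1$ and linearizing the products is one common route to a linear program, in the spirit of the relaxation mentioned in the abstract.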

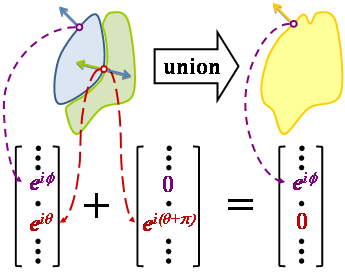

Additive representation: A segment, or a union of segments, is represented by a complex-valued vector. Non-zero entries represent the angle of the normal to the edge elements along a segment's contour. The blue and green segments share a common boundary, so their representations share non-zero entries for the edge elements along the common portion of the boundary. However, the normal angles at these common edge elements differ by $\pi$. As a result, when the two representation vectors are added, the entries of the shared edge elements vanish. These are exactly the edge elements in the interior of the union. Consequently, the resulting vector represents only those edge elements that lie along the exterior boundary of the entire union.
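The cancellation described in this caption can be illustrated with a small sketch. It assumes a toy layout that is not from the paper: two adjacent unit squares ("blue" on the left, "green" on the right) with seven edge elements in total, where each non-zero entry is stored as $e^{i\theta}$ for the outward normal angle $\theta$. The edge indexing, the `represent` helper, and the unit-magnitude encoding are all hypothetical choices made for illustration.

```python
import cmath
import math

# Toy layout (an assumption for illustration): two adjacent unit squares.
# Edge elements are indexed 0..6; index 3 is the edge shared by both squares.
NUM_EDGES = 7
SHARED = 3


def represent(normals):
    """Build a complex vector with exp(i*theta) at each edge the segment touches.

    `normals` maps an edge index to the outward normal angle (radians).
    Edges not on the segment's contour stay zero.
    """
    vec = [0j] * NUM_EDGES
    for edge, theta in normals.items():
        vec[edge] = cmath.exp(1j * theta)
    return vec


# Blue square: left, top, bottom edges plus the shared edge (normal points right, angle 0).
blue = represent({0: math.pi, 1: math.pi / 2, 2: -math.pi / 2, SHARED: 0.0})
# Green square: the shared edge (normal points left, angle pi) plus top, bottom, right edges.
green = represent({SHARED: math.pi, 4: math.pi / 2, 5: -math.pi / 2, 6: 0.0})

# Adding the vectors gives the representation of the union.
union = [b + g for b, g in zip(blue, green)]

# Entries that survive (up to floating-point error) are the exterior edges.
exterior = [k for k, z in enumerate(union) if abs(z) > 1e-9]
print(exterior)  # [0, 1, 2, 4, 5, 6]
```

The shared edge contributes $e^{i0} + e^{i\pi} = 0$, so it drops out of the sum, leaving only the six edges on the outer boundary of the combined rectangle.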

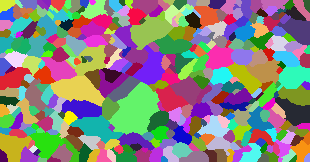

Joint clustering: Oversegmentations of two consecutive frames from the 'squirrel3' sequence of the Video Dataset for Occlusion/Object Boundary Detection are generated using a watershed transform (first and second rows). Our shape-based joint clustering method seeks to maximize the agreement between clusters of super-pixels across images. The result obtained by our algorithm is shown in the bottom row. Each segment is shown with its average color and surrounded by a white outline.